We create content all day. We take pictures, write comments on articles, or even the articles themselves. We save bookmarks, or press some like or heart buttons. Even if this content is of no value to anyone else, at least it is to ourselves. Still, we trust other parties, commercial ones, with servers often in other countries, to give us back our content any time of the day with the click of a button. Even if they want to, they may not always be able to do so. In my previous post I wrote that we put too much trust in the internet.

Would it not be nice if all this content were on our own hardware, where we could easily categorize and search it, and share copies with our friends? I would like to share some ideas on how to make this technically possible, so this is going to be a technical article.

A database is needed to store our content as well as the metadata. The database needs to allow for an extremely flexible schema that, ideally, end users can extend on the fly. We also want to interact with other users, so URIs are needed for shared concepts. Connecting concepts means we are talking about a graph database. It should be implementation-agnostic and serialize to a text format, so it can be saved to disk in a sort-of-human-readable form. It should be obvious by now that I am talking about an RDF triple store.
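To make the idea concrete, here is a minimal sketch of what "triples that serialize to text" could look like. It is a toy stand-in for a real triple store, not an implementation of one. The `TripleStore` class, the `example.org` URIs and the `mood` predicate are made up for illustration; only the Dublin Core `title` predicate is an existing vocabulary term. The output follows the line-based N-Triples style.

```python
# Toy in-memory triple store, for illustration only (not a real RDF library).

def term(value):
    """Render a term in N-Triples style.

    Simplifying assumption: anything that looks like a URI is a resource,
    everything else is a plain string literal.
    """
    if value.startswith(("http://", "https://", "urn:")):
        return f"<{value}>"
    escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'


class TripleStore:
    def __init__(self):
        self.triples = set()

    def add(self, s, p, o):
        self.triples.add((s, p, o))

    def match(self, s=None, p=None, o=None):
        """Return the triples matching a pattern; None acts as a wildcard."""
        return [t for t in self.triples
                if (s is None or t[0] == s)
                and (p is None or t[1] == p)
                and (o is None or t[2] == o)]

    def serialize(self):
        """One line per triple, sorted so the file on disk is stable."""
        return "\n".join(f"{term(s)} {term(p)} {term(o)} ."
                         for s, p, o in sorted(self.triples))


store = TripleStore()
POST = "http://example.org/me/post/1"  # hypothetical URI for one of my posts
store.add(POST, "http://purl.org/dc/terms/title", "Owning our content")
# Extending the "schema" on the fly is just a matter of using a new predicate.
store.add(POST, "http://example.org/vocab/mood", "optimistic")  # hypothetical predicate
print(store.serialize())
```

Because there is no fixed table layout, adding a new kind of statement never requires a migration, which is the flexibility asked for above.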

Metadata and textual content can easily be stored here. The question is whether you want all your content to go there. For photographs, a low-resolution image or thumbnail could be added, but full-resolution images or videos will be too big to be practical. Instead, we can compute a SHA-2 hash (SHA-256, for example) of each file and save only the metadata, pointing to the original file on the filesystem. The hash has the added benefit that it can serve as the basis for a URI, because it is (practically) globally unique by the nature of SHA-2. A derivative work will have a different hash, and therefore get a different URI.
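A small sketch of this hashing step, using Python's standard `hashlib`. The `urn:sha256:` prefix is a form I picked for illustration, not a registered scheme, and the `content_uri` and `describe` helpers are hypothetical names. The file is read in chunks so that a large video does not have to fit in memory.

```python
import hashlib
from pathlib import Path


def content_uri(path, chunk_size=1 << 20):
    """Hash a file with SHA-256 and return a hash-based URI.

    The 'urn:sha256:' prefix is an assumption made for this example.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read 1 MiB at a time until read() returns an empty bytes object.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return "urn:sha256:" + digest.hexdigest()


def describe(path):
    """Metadata to keep in the graph; the file itself stays on disk."""
    path = Path(path)
    return {
        "uri": content_uri(path),
        "location": str(path.resolve()),
        "size": path.stat().st_size,
    }
```

Two byte-identical copies of a photo get the same URI wherever they live, while a cropped or re-encoded version gets a new one.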

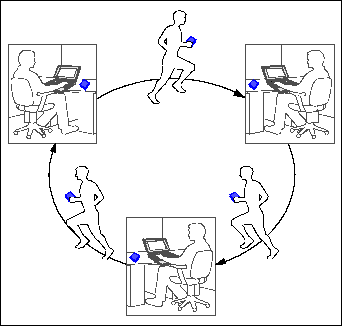

There will be many sources of content. Ideally, websites would eventually add a "save" button next to the existing Facebook and other "like" buttons, which saves the page as a bookmark in your personal content graph. Alternatively, a browser plugin could be made to do the same.

Local subdirectories should be scanned automatically, and metadata should be saved when new files arrive. For pictures and other media files, this would include the EXIF data. ExifTool is already a very good analyzer for this, and it can output its results as RDF/XML.
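The scanning step could be sketched roughly as follows. The function names are hypothetical, and the sketch assumes that ExifTool is installed and available on the `PATH` as `exiftool`; its `-X` option requests RDF/XML output. A real scanner would persist the set of seen files and probably watch the filesystem instead of re-walking it.

```python
import os
import subprocess


def find_new_files(root, seen):
    """Walk 'root' and return the files not seen before, remembering them in 'seen'."""
    new = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if path not in seen:
                seen.add(path)
                new.append(path)
    return sorted(new)


def exif_as_rdf(path):
    """Return ExifTool's RDF/XML description of a file.

    Assumption: the 'exiftool' command is installed and on the PATH.
    """
    result = subprocess.run(
        ["exiftool", "-X", path],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Calling `find_new_files` on a schedule and passing each result to `exif_as_rdf` would yield metadata that is ready to load into the triple store.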

Remote social websites should be connected, using their APIs to retrieve the user's content from there. The list of websites is potentially endless, but would include Facebook, Google, Twitter and Flickr. Sites like these oppose scraping, but we are talking about a user's own content here. For Android devices, an app could register an intent filter that matches any URL. It would then show up as a destination for sharing, and simply save the shared URL.

There are many possible targets that could benefit from a structured, extensive content repository. People would be able to browse their own history like a diary, and read back anything they ever added. People with a website could make it much more interesting and more up to date when it is backed by the same repository. As long as other media like Facebook and Twitter are online, it will be easy to post there. When you create an item with a headline, an image and some text, it can easily be shared as such on Facebook or Twitter, with a link back to the website.

Sharing with friends would be as simple as copying a named graph. This would also ensure that there is another copy if yours is lost. Of course, even without sharing, you could make an off-site backup, to save it for yourself or your children.
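As a rough sketch of what "copying a named graph" means: if every statement carries a fourth element naming the graph it belongs to, sharing is just selecting the statements with that name and adding them to someone else's store. The sets of tuples and the `example.org` URIs below are hypothetical stand-ins for two real repositories.

```python
def copy_graph(source, graph, target):
    """Copy all quads of one named graph from 'source' into 'target'.

    Quads are (subject, predicate, object, graph) tuples kept in plain sets.
    Returns the number of quads that belong to the named graph.
    """
    selected = {quad for quad in source if quad[3] == graph}
    target.update(selected)
    return len(selected)


HOLIDAY = "http://example.org/me/graph/holiday"  # hypothetical graph names
PRIVATE = "http://example.org/me/graph/private"

mine = {
    ("http://example.org/me/photo/1", "http://purl.org/dc/terms/title", "Beach", HOLIDAY),
    ("http://example.org/me/photo/2", "http://purl.org/dc/terms/title", "Harbour", HOLIDAY),
    ("http://example.org/me/note/1", "http://purl.org/dc/terms/title", "Diary", PRIVATE),
}
friend = set()

copied = copy_graph(mine, HOLIDAY, friend)
```

Only the holiday graph ends up in the friend's store. The private graph stays where it is, and copying the same graph again changes nothing because the store is a set.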

Once we interconnect our repositories, new possibilities show up. This is where we realize the promise of the Semantic Web, Web 3.0 or the Giant Global Graph (GGG). Here we begin to create a common knowledge center. Now we can see what other people state about a resource, be it an article or a product. We can warn each other about bad experiences, but also debunk the false facts and news that currently pollute the social web. In fact, all the knowledge a person has can be modeled by that person and shared with all of mankind to build on.

I think all the technology exists to make a system that allows all of the above. In fact, I think it could be built by a handful of people in a reasonable amount of time. Yesterday, Merck, the 7th largest pharmaceutical company in the world, open sourced its implementation of a triple store. It is based on the popular open source triple store RDF4J, and allows massive scalability by adding Apache Hadoop and HBase, which are also open source. Merck uses it for its enormous knowledge base. It would certainly allow a large number of people to store and share their content.